Bulk Upload Documents into SharePoint from Excel

To get files from your file system into a SharePoint document library you have a couple of options available. The following guide covers how to synchronise files using an excel spreadsheet into a SharePoint Document Library.

We take the idea that you have a spreadsheet or CSV file containing the file names, paths to each file and any additional metadata. This could be user generated or created as an export from another internal system. In brief you just connect to your spreadsheet, connect to SharePoint, map the columns, compare and sync.

The example shown below uploads only a small sample of documents, however you can use this method to bulk upload thousands of documents.

Requirements

Before getting started you will need to ensure that you have the following:

- Windows 10 or Windows Server

- Downloaded & Installed Data Synchronisation Studio

- Have a Document Library created in SharePoint Online

- Have a Spreadsheet containing your metadata and file paths. You can see the example data we use below.

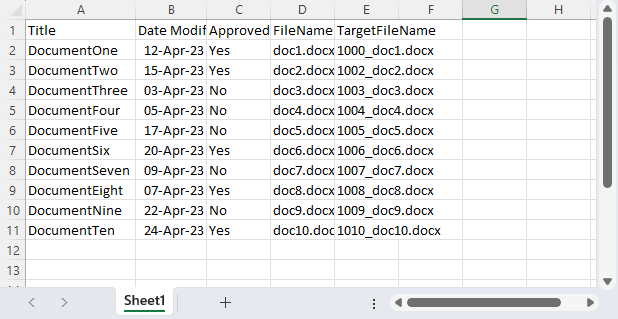

The Spreadsheet

In your Excel spreadsheet you need to specify the name of the document and any metadata you want to be uploaded alongside it. In our example we have a range of ten documents with different modified dates and different approval status’.

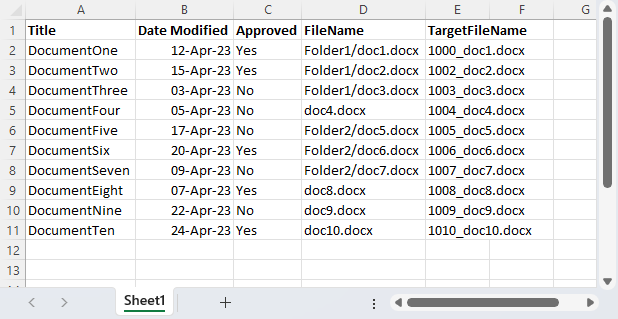

Documents in SubFolders

If your documents are contained within folders in your directory, you want to include the sub-directory folder name with the file name for example Folder1\doc1.docx. This will ensure that the document can be found, and your directory structure is replicated in the Document Library. An example spreadsheet can be seen here:

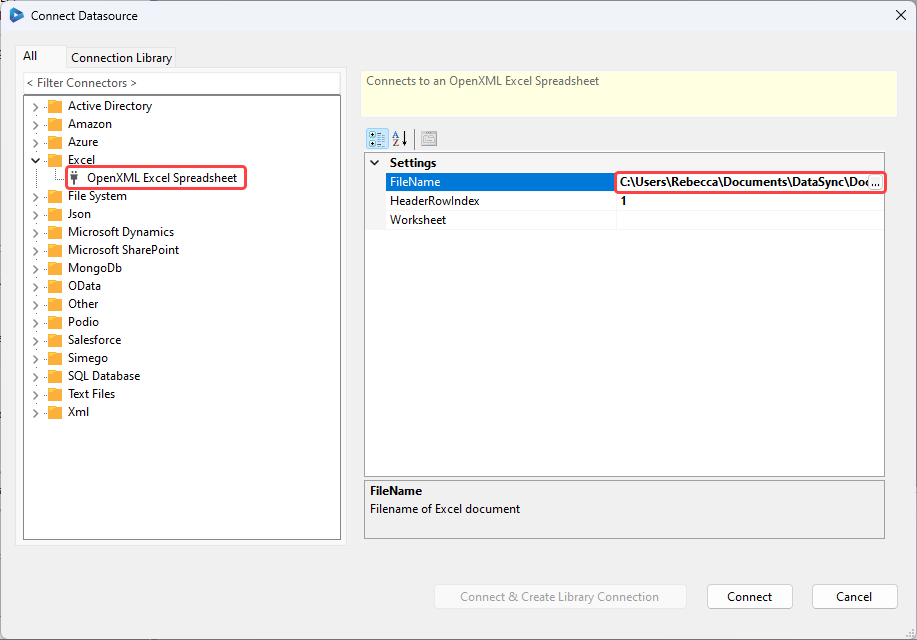

Connect to your Excel Spreadsheet

To get started open Data Sync and click onto Connect Datasource link in the source (data source A) window. This will open the connection window where you can connect to your spreadsheet.

To do this find Excel and expand the tree so that you can select the OpenXML Excel Spreadsheet provider from the list.

Under the FileName field click onto the ellipsis to open the file explorer and locate your spreadsheet containing your metadata. Then just click onto the Connect button to connect.

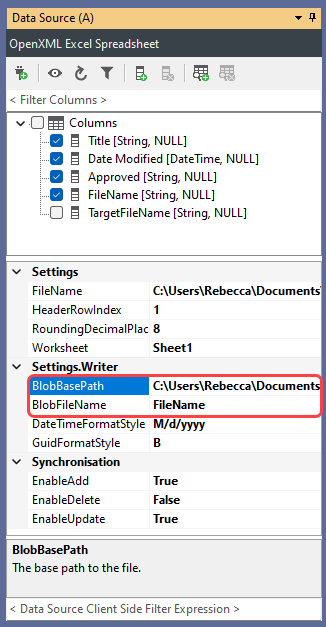

You will now need to define a couple of connection properties to find the files mentioned in the spreadsheet. In the connection properties window, below the source columns, go to the section Settings.Writer and complete the following fields:

- BlobBasePath : Enter in the file path to your directory of documents, in our example this is

C:\Users\Rebecca\Documents\Demo Documents. - BlobFileName : Choose the column from the spreadsheet that returns the name of the file, in this example it is FileName.

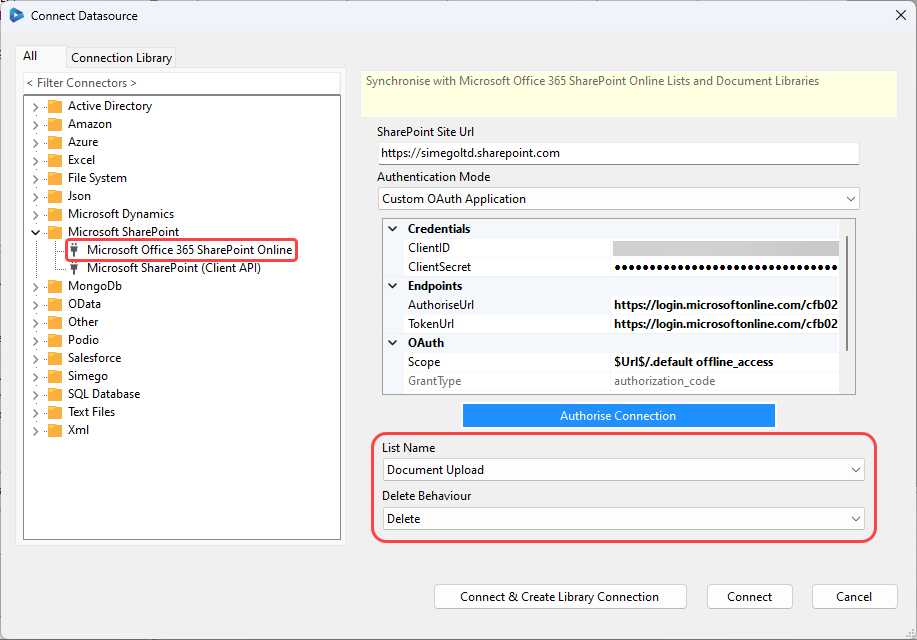

Connect to your SharePoint Document Library

Now you need to connect to your SharePoint Document Library as the Target. Click onto the Connect Datasource link in the Target (datasource B) window and go to the SharePoint section.

Then select SharePoint Online for connecting to a SharePoint Online site or SharePoint ClientAPI to connect to an On-Premise SharePoint site. More details on connecting to SharePoint On-Prem can be found here.

As we will need to write to the Modified column in SharePoint we need to use the Custom Azure App OAuth method to connect. Alternatively you can create the application registration in SharePoint using ACS. We have our full documentation on how to connect using ACS here.

You need to enter in the URL to the SharePoint site that the Document Library is located. In this example the Document Library is found in a subsite called Test, so our URL will look similar to: https://company.sharepoint.com/Test.

- To connect to the Root site the URL would look similar to:

https://company.sharepoint.com - To connect to a subsite of a subsite the URL would look similar to:

https://company.sharepoint.com/subsite/subsite2

Follow the details from the documentation to connect to SharePoint using your preferred method, and click Authorize Connection to connect.

Now you can select your Document Library from the dropdown list and set the delete behaviour.

We recommend saving the connection to the connection library so that future projects can be quickly set up, to do this click onto Connect and Create Library Connection and enter in a name for the connection. This will add a connection to your SharePoint site/sub-site in the connection library so that you can quickly access all the lists and libraries in the future. You only need to add the connection to each site/subsite once, as you can connect to any of the lists or libraries within that site from the Connection Library window.

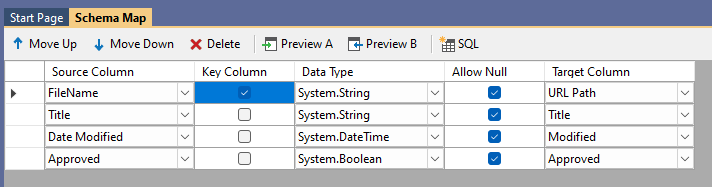

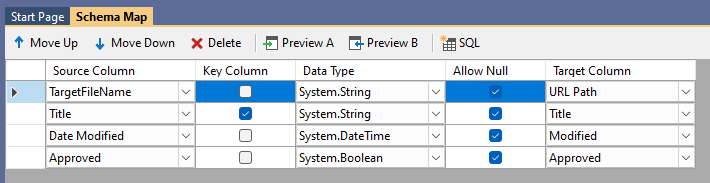

Map your Source and Target Columns

The next step is to map your source columns to your target columns. The mapping in your project may look slightly different if you use different column names.

At a minimum you need to map a source column to the URLPath column in SharePoint. In this example we map the FileName to the URLPath and set this to be the key column.

The key column must be unique and be able to distinguish each document from each other. As there can not be duplicate filenames this works for the key column.

We're also adding a Title, an approved status and a modified date to each document. You can see the full schema mapping for this example below:

If you are writing a modified date against the file you will need to use the custom OAuth option and assign the relevant permissions to the app. Without this the modified date will be set to the date the files were uploaded (today)

If you have specified the folder structure that the file is found in, in the FileName column this will be handled by Data Sync and the relevant folder will be created in SharePoint if it does not already exist.

You can map a different filename to the one you specified in the BlobFileName field earlier. Additionally the column specified in the BlobFileName property on the source does not need to be included in the schema map.

This means that if you want your files to go to different folders or have a different name, you just need to map another filename column (such as TargetFileName in the example spreadsheet) to the URL Path in the schema map.

This would make the schema map look similar to this:

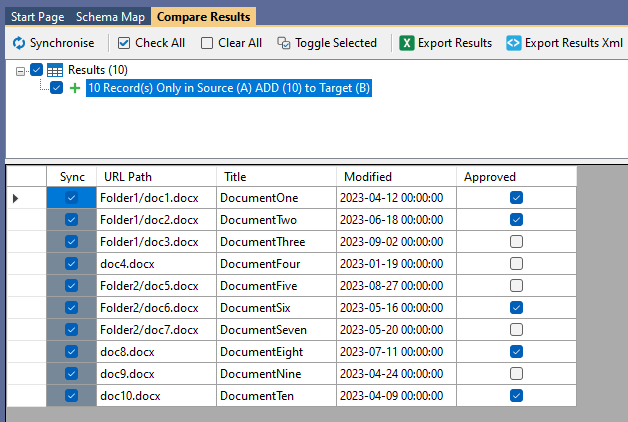

Compare and Preview the Data

Once the schema map has been configured and a key column set you can run the comparison and preview the results. To do this click onto the Run Compare button in the toolbar.

This will return the differences between the source and target data. Data Sync will present the additions, updates and deletes which you can preview by clicking onto each option.

Delete operations are always disabled by default on new Data Sync projects to enable Delete you need to set the EnableDelete property in the target connection to true.

For this example we have 10 records to add to the document library, and we can make sure that the data is appearing as we would expect.

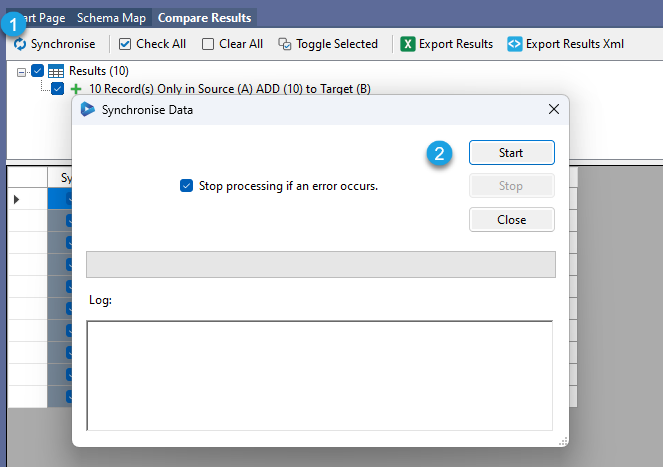

Synchronise

Once the comparison results look as expected, we can run the sync to synchronise the changes to SharePoint.

To do this click Synchronise and then Start to begin the sync.

To make sure everything was successful you can run the compare again, you should have 0 results, or you can browse to the Document Library in SharePoint.

Make sure to save your Data Sync project so that you can use it again in the future.

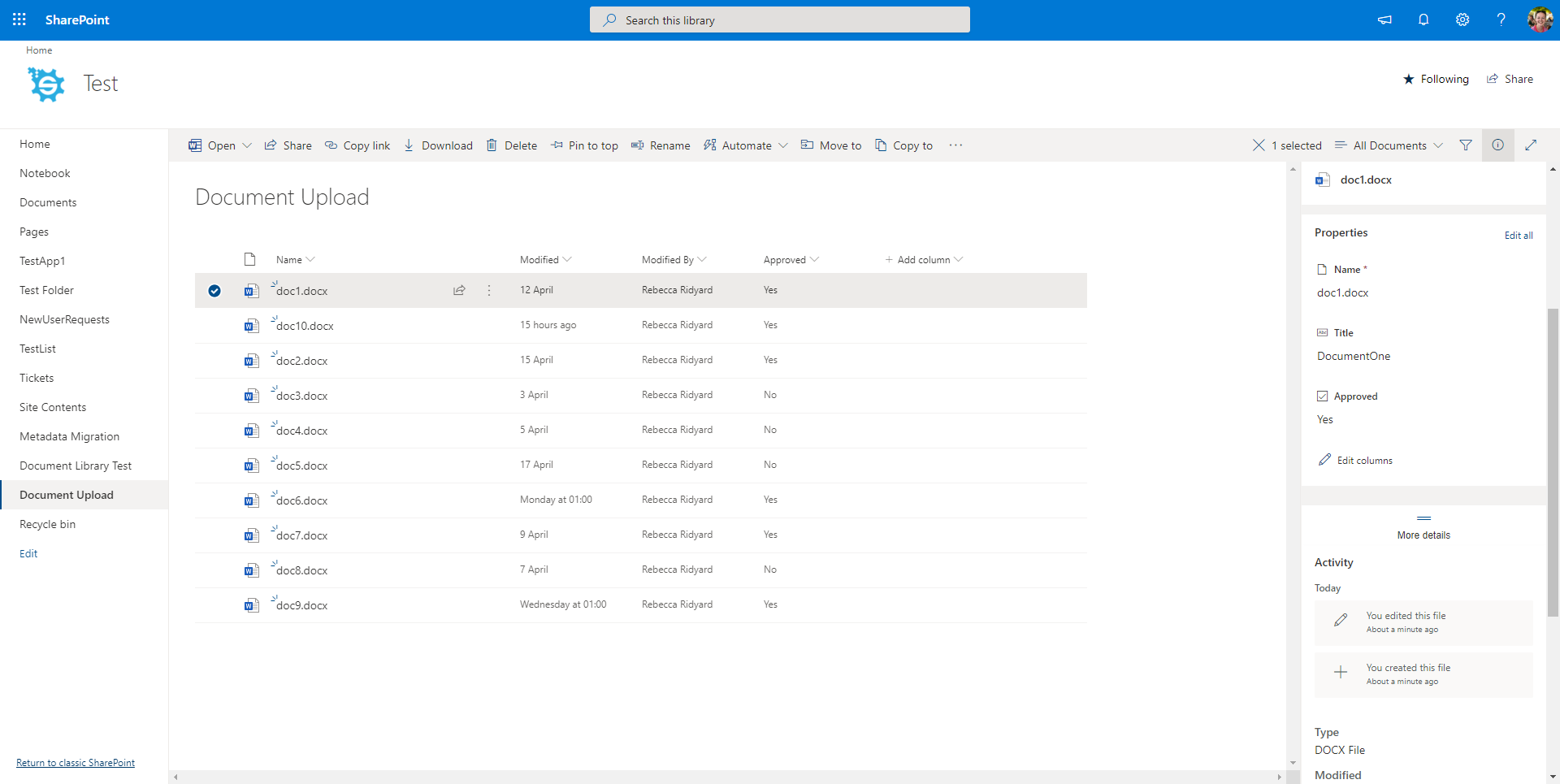

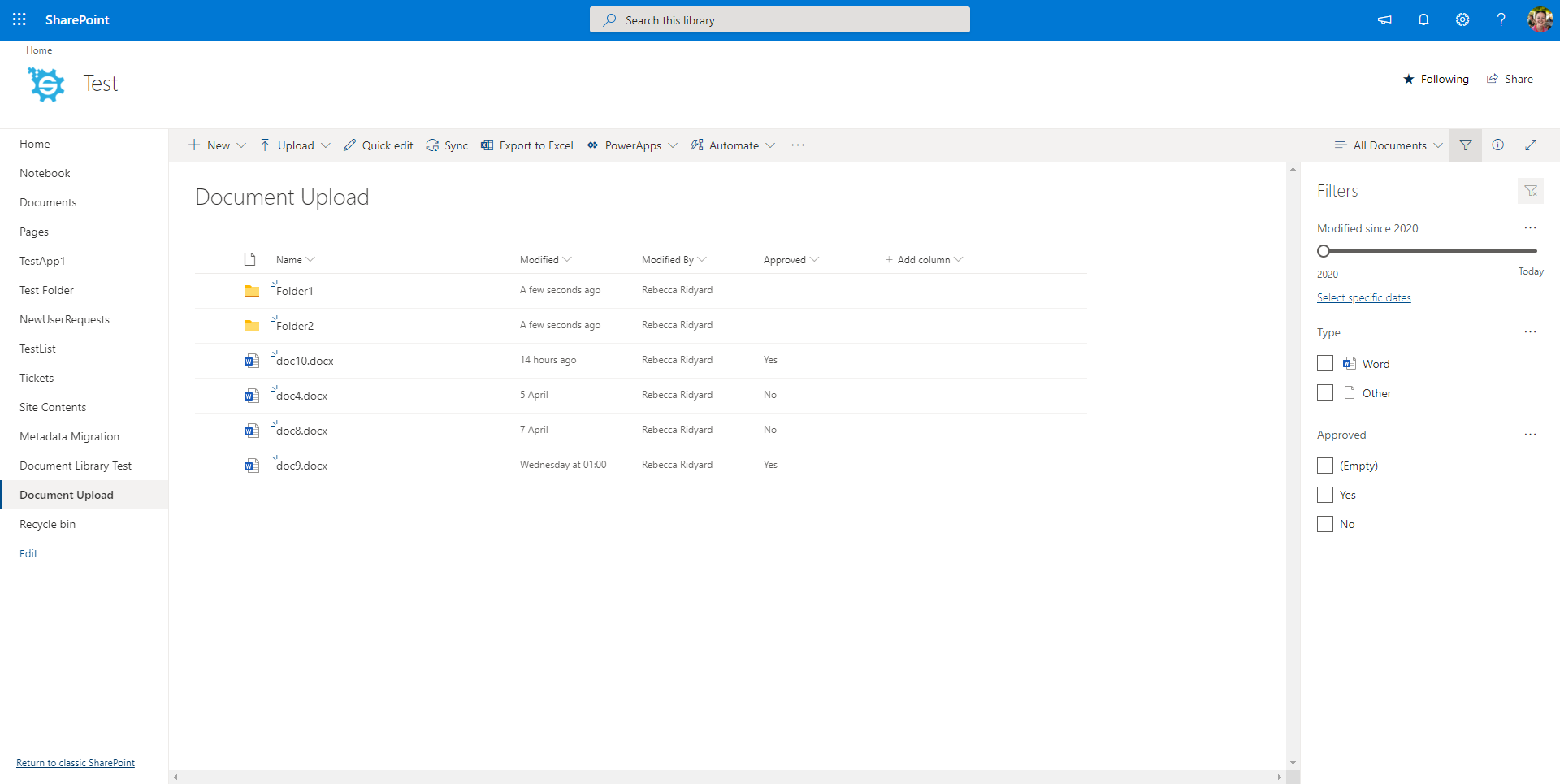

You should now be able to see all the documents added with their corresponding metadata in your SharePoint Document Library.

Results with Folders

If you defined folders in your paths and your folders didn't already exist then they will be created in SharePoint and the relevant files added. If the folders already existed then the files will simply be added to the matching folder.

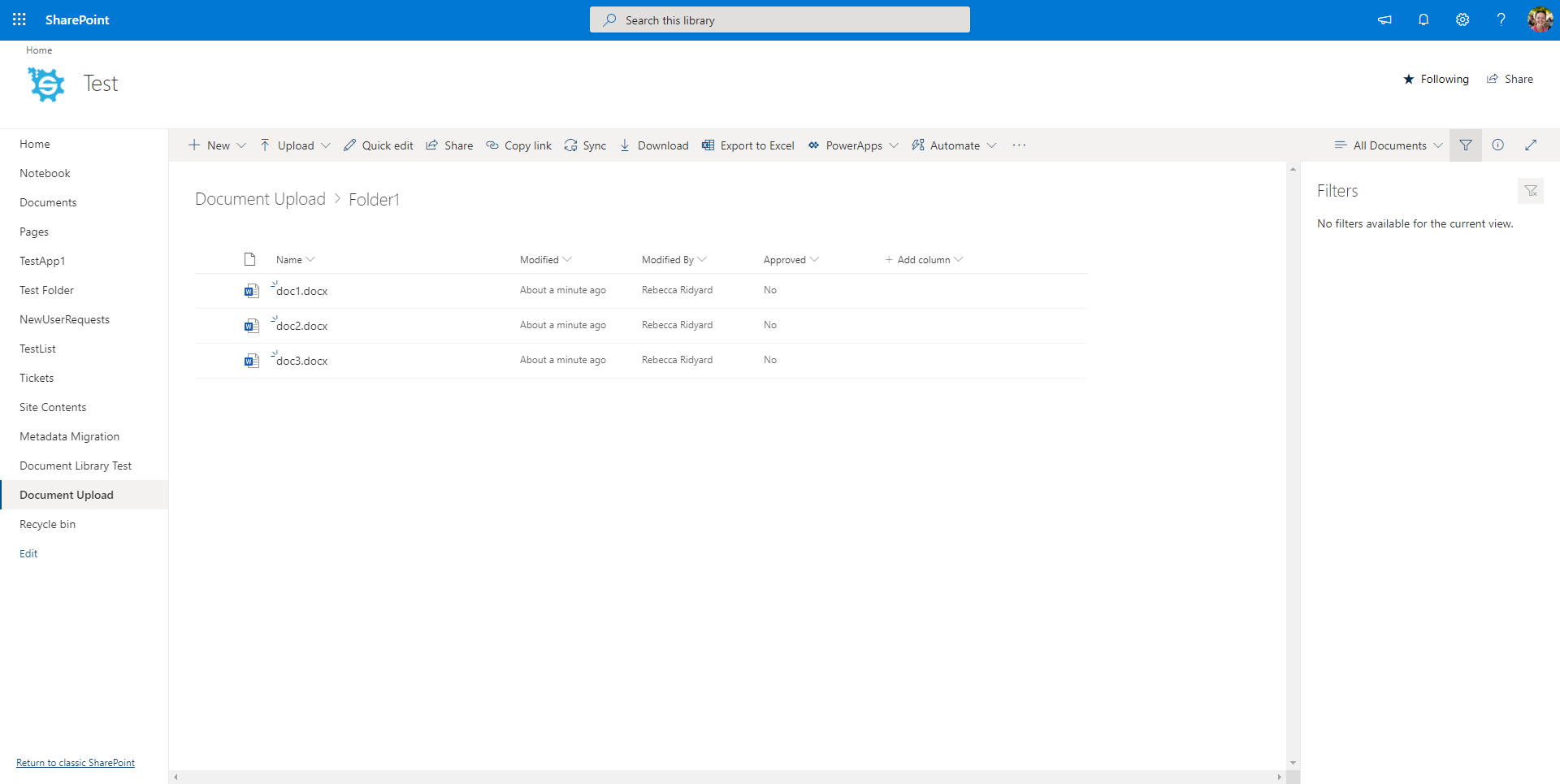

From the example data where the files were listed inside folders, the SharePoint Document Library now reflects this:

And clicking inside a folder will show the documents defined to be in that folder.

If you haven't already, make sure to save your Data Sync project so that you can schedule it to run on a regular basis or use it again when you need to.

Automate and Schedule

Now that your project is configured you can save it and then schedule it to run via the Data Sync Run Tool, Windows Task Scheduler, or Ouvvi Automation Server.

You may want to automate your project so that it runs on a regular basis, to do this you can use either the Run Tool or Ouvvi.

Ouvvi gives more options for scheduling, with both time and event based triggers. So you could configure a trigger to start your project whenever a change is detected in your SQL table or SharePoint List. Note that this requires using a modified date time stamp in your SQL table.

Alternatively you can use the Run Tool to schedule the project to run using Windows Task Scheduler.

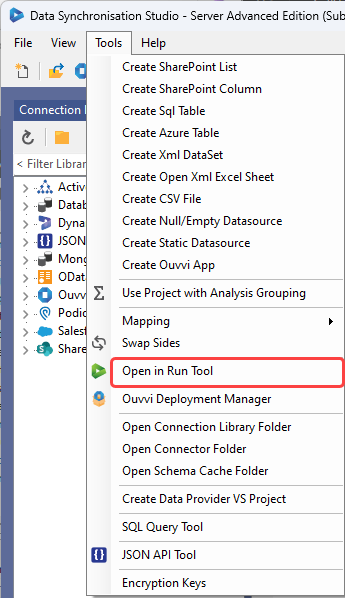

Run via the Run Tool

The Run Tool is an additional program that comes linked to Data Sync and enables you to build out your data integration jobs.

You can use this to group Data Sync projects that need to run in order and add additional step types such as adding an status report to email you when the project has run and if there were any failures. Each step is run in the order it appears in the list and you can apply conditional rules so that the next step can run if the previous one succeeded or had data changes for example. To find out more please see our Run Tool Documentation.

To open your project in the Run Tool, in your Data Sync project open the Tools menu and select Open in Run Tool.

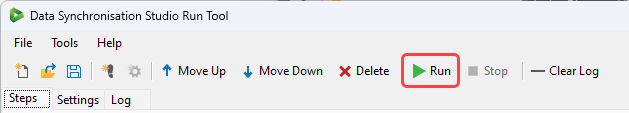

You can then click onto the green Run button to check it runs as expected. If there are data changes you will be provided with a count of how many items were added updated or deleted

Run via the Command Line

Another option is to Run the Run Tool project or single Data Sync project from the command line. To do this pass the path to the project file like this:

Run Tool Project

%DATASYNCINSTALLPATH%\Simego.DataSync.Run.exe -execute "D:\DSProjects\myproject.dsrun"

Data Sync Project

%DATASYNCINSTALLPATH%\Simego.DataSync.Run.exe -execute "D:\DSProjects\myproject.dsprj"

Automate with Windows Task Scheduler

You can then schedule your Run Tool project to run when you need it to using Windows Task Scheduler. For the full details on how to do this see our Task Scheduler Documentation.

Automate with Ouvvi

An alternative option, with more scheduling capabilities is to use Ouvvi Automation Server. Ouvvi enables you to fully schedule and manage all of your Data Integration Projects, from Data Sync projects to SQL Statements to Powershell Scripts.

It provides full logging and documentation capabilities to fully manage your integration operations.

To find out more see our Ouvvi Documentation or send us an email.